Compose Hackathon: Day… the rest

I meant to write this on Friday, but got caught up trying to gather some last bits of data after my dev environment fell over and decided to stop running benchmarks altogether.

Since Wednesday…

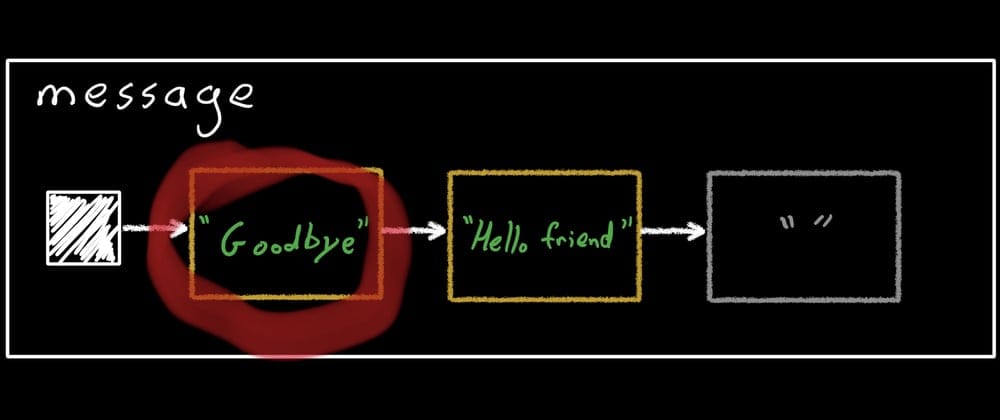

In the last post, I left off having built the piece table I initially meant to. The results were not great. So I spent some time trying to clean up how the pieces themselves were managed, and ensure that after every operation only the minimal number of pieces required to represent the string were left. I also made better use of PersistentList's mutation APIs (you can create a mutable Builder object to more efficiently perform multiple changes at once). After all that, and some other tweaks, I was able to get the numbers down a bit but they were still a lot worse than the original gap buffer.

In these charts, ignore the last bar on the right for now, I'll get into that next. The cyan bar is the result of all the above work.

I also turned on profiling for the Microbenchmark library, by adding this line to the benchmark module's gradle config:

android {

defaultConfig {

testInstrumentationRunnerArguments["androidx.benchmark.profiling.mode"] = 'StackSampling'

}

}

It turns out most of the time was spent in PersistentList code, so I figured it was time to give up on that library and try a different approach. I replaced the list of pieces with a simple linked list, which should, in theory, perform a lot better at adding and removing pieces in the middle of the table. It wasn't. That last bar in the charts above are the linked list, and it's even worse than the persistent list implementation.

A lot of time was also spent in snapshot-related code however – reading and writing state objects is not free, and in the linked list case each operation involved many such operations. And I still hadn't even handled the case where multiple snapshots are writing to the piece table in parallel. In my persistent list implementation, all of the mutable properties of the piece table were stored as immutable fields in a single "record" object inside a MutableState, and in the linked list version every list node pointer was its own MutableState. As I discussed in my (last) Monday post, I figured I'd need to get my hands dirty with snapshot IDs to handle multithreaded contention, and now I was starting to wonder if I could use the state system more efficiently as well.

Getting comfortable with MVCC

Compose's snapshot state system is an implementation of multi-version concurrency control, or MVCC. Coming into this week, I had a kind of hand-wavy idea about how the high-level concepts worked, but was still foggy on some of the details. So I re-watched a short talk Chuck gave internally a few weeks ago on Thursday night, and over coffee Friday morning read through the snapshot state code again, and finally it all clicked. When I was initially thinking through how this could work, I figured that if I could somehow make the piece table aware of whether it was performing multiple mutations in the same snapshot, and no other snapshots had access to the internal, mutable data structures, I could just mutate them in-place and save some work. I figured I'd have to track snapshot IDs manually somehow.

For an introduction to how to how snapshots behave at a high level, and how to use them, see some of my other blog posts.

Turns out, the whole snapshot state system is built to support exactly this use case. I think this is super cool – so cool, in fact, that it deserves its own explainer post. The biggest thing I took away from this hackweek project was not only a better understanding of the snapshot system, but a general technique for making any mutable data structure snapshot-friendly.

But if you don't want to read all that just now, the fundamental points are:

- A mutable snapshot state object internally contains a copy of all of its data for each open snapshot (each copy is called a "record"), and

- Every mutable snapshot state object being written to inside a mutable snapshot (e.g. inside a

Snapshot.withMutableSnapshotblock) gets its own record, that it can only see inside that snapshot, and no other snapshot knows about, until the snapshot is applied/committed.

This means that, internally, a snapshot state object is a mix of mutable and immutable records. Every operation performed in a mutable snapshot has a mutable record that it can write to in-place as long as that snapshot is open, and when the snapshot is applied or committed, that record is effectively "frozen" and becomes immutable, so that it will never be changed again but new snapshots can read from it, and new mutable snapshots will make copies of it for writing to. Also, new records are only created when the object is actually written to. So creating new snapshots (both read-only and mutable) is cheap.

Snapshots and copy-on-write

Snapshot state objects are effectively copy-on-write: When written, they are asked to create a copy of their current state, and then for the rest of the lifetime of the snapshot the copy can be written to directly.

And because the state objects themselves are copy-on-write, you can theoretically make any mutable data structure snapshot-aware by making it copy-on-write internally. The only thing you have to think about is the tradeoff between how expensive it is to create a copy, vs how expensive it is to mutate-in-place. For example, a simple array is about as cheap as you can get for in-place mutation, but to create a copy you have to copy the whole array. On the other hand, persistent data structures like PersistentList are very cheap to copy, but have a little more overhead to change.

Luckily, by this point I've already implemented a piece table using both simple mutable data structures (basically ArrayList) and one using persistent lists. Both of these approaches are very easy to adapt to include an optional copy operation before writing. The linked-list piece table could be adapted as well, but since it ended up being the worst of both worlds performance-wise, and that's without even copying the whole list, I didn't bother exploring it any further.

I was excited by this realization because it meant I could focus on analyzing that tradeoff between copy and write performance, and as long as I ensured the copy-on-write logic worked correctly, the rest of the snapshot state object requirements would be satisfied. And it also meant that the performance of any such data structure should be almost equivalent to its non-snapshot-aware variant in all cases except the first write in a new snapshot. This is very useful characteristic for something like a text editing buffer, which needs to perform multiple internal operations for a single external write, and which might be asked to perform multiple writes in quick succession (IME commands can be sent in a batch that needs to be applied atomically).

It also means, despite performing any number of internal operations for a single external write, we only need to do a single "snapshot write", which involves a small amount of its own overhead so minimizing them is a win.

Applied learning

So with this in mind, and the hackweek demo session fast approaching, I quickly set to work Friday morning modifying my original piece table implementation (the ArrayList one) to be copy-on-write. Unfortunately, since all of the benchmarks I was using operate without knowledge of snapshots, which means they're all effectively working in the global snapshot, they only measure the "write" part of "copy-on-write", and not the "copy" part. If I end up working on this more, I would add additional benchmarks, but I was ok ignoring this for now since the hottest code modifying a data structure like this should probably be all done inside a single snapshot anyway, for isolation and atomic change notification.

It was extremely easy to make this change, since all I had to do was:

- Make a record class that stored the length, add buffer, piece list, and a

writeableflag indicating whether the lists needed to be copied for this record. - Inside the mutating

replacemethod, get the current writeable record (using the Snapshot APIStateRecord.writable) check thewriteableflag and, if not set, replace the lists with copies and set the flag on the record.

The rest of the piece table logic stayed exactly the same, just working on the list from the record.

Unfortunately, I was not able to compare the performance of this new implementation with the others, since my dev environment decided to completely stop working with the phone I'd been running benchmarks on, and I ended up having to switch phones and re-run all the benchmarks on that device for an accurate comparison (so in the charts below, don't try to compare the actual numbers with the charts above). The results were very promising: the new piece table (cyan) ran much quicker than all the other snapshot-aware implementations I built (yellow, green, orange), and only a little slower than the non-snapshot-aware one (red).

Of course, the gap buffer is faster, and requires a lot fewer allocations, than all the piece tables. And I haven't had the time to build this, but the gap buffer should also be adaptable using the same technique. Gap buffers are extremely fast at adjacent writes because they end up being simple array writes. The only expensive operation on a gap buffer is when writing to a different place, since it requires moving the gap, which means shifting/copying a potentially large (even the entire) array – this is why the gap buffer does so poorly in the "random, discontinuous writes in large text" benchmark. In a copy-on-write adaptation, we could mitigate this because when we make the copy, we already know where we need to move the gap to, so since we're copying the whole array anyway we can move the gap at the same time. So, again, the resulting snapshot-aware gap buffer should have almost identical performance to the non-snapshot-aware one, except when performing the first write in a new snapshot.

And now that we know how to easily make both these data structures snapshot-aware, it even should be easy to adopt a hybrid approach where we switch internal data structures on the fly when the buffer surpasses a certain size.

Lastly, we get one more bonus feature: it becomes extremely cheap to make a complete copy of data structures implemented in this way, for example to store in an undo stack. The data structure simply needs to create a new instance of itself with a copy of the current record, and flag its own current record as needing another copy-on-write (e.g. by internally performing the read inside a new snapshot, which will cause the snapshot system to do that automatically).

Conclusion

As far as I'm concerned, this hackweek was an overwhelming success. I got a much better understanding of how the snapshot system can actually be used to implement complex data structures, and got enough data to be reasonably confident that we could feasibly ship a snapshot-aware text editing buffer in production. That opens up some exciting possibilities for cleaning up the existing internal text(field) code, as well as potential new public text rendering and editing APIs.

To summarize my highlights,

- The Jetpack Microbenchmark library is super useful for measuring and optimizing data structures.

- Implementing data structures from scratch is really fun, especially when it's to solve an actual real-world problem and not just pass a course.

- Given any fancy, complex mutable data structure, you can give it the isolation, consistency, and atomicity guarantees of the snapshot system by simply making it copy-on-write and wrapping in a thin layer of

StateObject+StateRecordboilerplate (although you'll probably want to measure and explore further optimizations from there). - Gap buffers > piece tables.

If you made it this far, thanks for following along, and ~stay tuned~ check out this post for a deeper dive into how to actually implement a StateObject!

Update: Added links to the state object implementation blog post.